ID2223 TensorFlow Lab 2

Tianze Wang

Table of contents

- A Quick Recap

- Introduction to Computer Vision

- Introduction to Convolutions

- Convolutional Neural Networks

- More Complex Images

Section 1: A Quick Recap

Consider the following sets of numbers. What is the relationship between them?

| x | y |
|---|---|
| -1 | -2 |
| 0 | 1 |
| 1 | 4 |
| 2 | 7 |
| 3 | 10 |
| 4 | 13 |
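If you want to check your answer before training anything, an ordinary least-squares fit of a straight line y = w·x + b can be computed in closed form. The sketch below is plain Python (no TensorFlow), and the helper name `fit_line` is hypothetical. It recovers w = 3 and b = 1, i.e. y = 3x + 1, which is the rule the neural network below has to approximate from the data.

```python
# Ordinary least-squares fit of y = w*x + b in closed form (plain Python).
# fit_line is a hypothetical helper for illustration only.
xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
ys = [-2.0, 1.0, 4.0, 7.0, 10.0, 13.0]

def fit_line(xs, ys):
    """Return (w, b) minimising the sum of squared errors of y = w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    # The fitted line passes through the point (mean_x, mean_y)
    b = mean_y - w * mean_x
    return w, b

w, b = fit_line(xs, ys)
print(f"y = {w}x + {b}")  # prints "y = 3.0x + 1.0"
```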

Now let's look at how to train an ML model to learn the pattern that relates these values.

```python
import tensorflow as tf
import numpy as np

print(tf.__version__)
```

```
2.0.0
```

Prepare training data


```python
# training data
X_train = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)
y_train = np.array([-2.0, 1.0, 4.0, 7.0, 10.0, 13.0], dtype=float)
```

Define the model

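```python
# A single Dense layer with one unit and one input feature
model = tf.keras.Sequential([tf.keras.layers.Dense(units=1, input_shape=[1])])
model.compile(optimizer='sgd', loss='mean_squared_error')

# plot the model (requires the pydot and graphviz packages to be installed)
tf.keras.utils.plot_model(model)

# model summary
model.summary()
```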

```
Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                (None, 1)                 2
=================================================================
Total params: 2
Trainable params: 2
Non-trainable params: 0
_________________________________________________________________
```
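The summary lists two trainable parameters because a `Dense` layer with no activation computes `inputs @ kernel + bias`: with one input feature and one unit, that is a single weight w plus a single bias b, so the model is exactly y = w·x + b. The plain-Python sketch below mirrors that arithmetic. The helper names are hypothetical, and this is not how Keras implements the layer internally.

```python
# Sketch of the arithmetic of a Dense layer with no activation:
#   outputs[j] = sum_i inputs[i] * kernel[i][j] + bias[j]
# dense_param_count and dense_forward are hypothetical helpers.

def dense_param_count(input_dim, units):
    """Kernel holds input_dim * units weights; bias holds one weight per unit."""
    return input_dim * units + units

def dense_forward(inputs, kernel, bias):
    """inputs: input_dim floats; kernel: input_dim x units nested list; bias: units floats."""
    return [
        sum(inputs[i] * kernel[i][j] for i in range(len(inputs))) + bias[j]
        for j in range(len(bias))
    ]

print(dense_param_count(1, 1))               # 2, matching the summary above
# With hypothetical weights w = 3 and b = 1, the neuron reproduces the table:
print(dense_forward([4.0], [[3.0]], [1.0]))  # [13.0]
```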

Train and evaluate
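Compiling with `optimizer='sgd'` and `loss='mean_squared_error'` means that `model.fit` repeatedly nudges w and b against the gradient of the mean squared error. The sketch below is a plain-Python approximation of that loop, not Keras's own code, and it rests on these assumptions: a learning rate of 0.01 (the default for Keras's SGD optimizer), one full-batch update per epoch (the six samples fit in `fit`'s default batch size of 32), and w = b = 0 at the start instead of Keras's random kernel initialisation. Because of that last difference, its loss values will not match the log printed by `model.fit`.

```python
# Full-batch gradient descent on mean squared error for y_hat = w*x + b.
# Assumptions: lr = 0.01 (Keras SGD default), one update per epoch,
# and w = b = 0 at the start (Keras initialises the kernel randomly).

X_train = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
y_train = [-2.0, 1.0, 4.0, 7.0, 10.0, 13.0]

def mse(w, b, xs, ys):
    """Mean of squared differences between predictions and targets."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def train(xs, ys, epochs=500, lr=0.01):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Residuals of the current predictions
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of the MSE with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

w, b = train(X_train, y_train)
print(w, b, mse(w, b, X_train, y_train))
```

After 500 epochs the parameters end up very close to w = 3 and b = 1, which is consistent with the steadily shrinking loss in the training log.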

```python
model.fit(X_train, y_train, epochs=500)
```

```
Train on 6 samples
Epoch 1/500
6/6 [==============================] - 0s 70ms/sample - loss: 100.6444
Epoch 2/500
6/6 [==============================] - 0s 301us/sample - loss: 79.1917
Epoch 3/500
6/6 [==============================] - 0s 247us/sample - loss: 62.3136
...
Epoch 10/500
6/6 [==============================] - 0s 232us/sample - loss: 11.6588
...
Epoch 20/500
6/6 [==============================] - 0s 274us/sample - loss: 1.0891
...
Epoch 50/500
6/6 [==============================] - 0s 309us/sample - loss: 0.0188
...
Epoch 100/500
6/6 [==============================] - 0s 296us/sample - loss: 0.0064
...
Epoch 200/500
6/6 [==============================] - 0s 307us/sample - loss: 7.9874e-04
...
Epoch 300/500
6/6 [==============================] - 0s 297us/sample - loss: 1.0025e-04
...
Epoch 400/500
6/6 [==============================] - 0s 292us/sample - loss: 1.2580e-05
...
Epoch 417/500
6/6 [==============================] - 0s 300us/sample - loss: 8.8398e-06
Epoch 418/500
6/6 [==============================] -
```
0s 298us/sample - loss: 8.6581e-06 Epoch 419/500 6/6 [==============================] - 0s 295us/sample - loss: 8.4800e-06 Epoch 420/500 6/6 [==============================] - 0s 332us/sample - loss: 8.3060e-06 Epoch 421/500 6/6 [==============================] - 0s 303us/sample - loss: 8.1354e-06 Epoch 422/500 6/6 [==============================] - 0s 332us/sample - loss: 7.9680e-06 Epoch 423/500 6/6 [==============================] - 0s 302us/sample - loss: 7.8045e-06 Epoch 424/500 6/6 [==============================] - 0s 423us/sample - loss: 7.6443e-06 Epoch 425/500 6/6 [==============================] - 0s 334us/sample - loss: 7.4876e-06 Epoch 426/500 6/6 [==============================] - 0s 293us/sample - loss: 7.3334e-06 Epoch 427/500 6/6 [==============================] - 0s 350us/sample - loss: 7.1826e-06 Epoch 428/500 6/6 [==============================] - 0s 334us/sample - loss: 7.0354e-06 Epoch 429/500 6/6 [==============================] - 0s 312us/sample - loss: 6.8906e-06 Epoch 430/500 6/6 [==============================] - 0s 348us/sample - loss: 6.7492e-06 Epoch 431/500 6/6 [==============================] - 0s 365us/sample - loss: 6.6107e-06 Epoch 432/500 6/6 [==============================] - 0s 326us/sample - loss: 6.4748e-06 Epoch 433/500 6/6 [==============================] - 0s 380us/sample - loss: 6.3417e-06 Epoch 434/500 6/6 [==============================] - 0s 259us/sample - loss: 6.2116e-06 Epoch 435/500 6/6 [==============================] - 0s 349us/sample - loss: 6.0838e-06 Epoch 436/500 6/6 [==============================] - 0s 305us/sample - loss: 5.9587e-06 Epoch 437/500 6/6 [==============================] - 0s 257us/sample - loss: 5.8363e-06 Epoch 438/500 6/6 [==============================] - 0s 346us/sample - loss: 5.7165e-06 Epoch 439/500 6/6 [==============================] - 0s 350us/sample - loss: 5.5994e-06 Epoch 440/500 6/6 [==============================] - 0s 313us/sample - loss: 5.4842e-06 Epoch 441/500 6/6 
[==============================] - 0s 333us/sample - loss: 5.3715e-06 Epoch 442/500 6/6 [==============================] - 0s 329us/sample - loss: 5.2610e-06 Epoch 443/500 6/6 [==============================] - 0s 311us/sample - loss: 5.1530e-06 Epoch 444/500 6/6 [==============================] - 0s 342us/sample - loss: 5.0471e-06 Epoch 445/500 6/6 [==============================] - 0s 281us/sample - loss: 4.9437e-06 Epoch 446/500 6/6 [==============================] - 0s 314us/sample - loss: 4.8420e-06 Epoch 447/500 6/6 [==============================] - 0s 357us/sample - loss: 4.7424e-06 Epoch 448/500 6/6 [==============================] - 0s 307us/sample - loss: 4.6447e-06 Epoch 449/500 6/6 [==============================] - 0s 326us/sample - loss: 4.5494e-06 Epoch 450/500 6/6 [==============================] - 0s 347us/sample - loss: 4.4561e-06 Epoch 451/500 6/6 [==============================] - 0s 355us/sample - loss: 4.3647e-06 Epoch 452/500 6/6 [==============================] - 0s 352us/sample - loss: 4.2747e-06 Epoch 453/500 6/6 [==============================] - 0s 341us/sample - loss: 4.1872e-06 Epoch 454/500 6/6 [==============================] - 0s 311us/sample - loss: 4.1009e-06 Epoch 455/500 6/6 [==============================] - 0s 264us/sample - loss: 4.0170e-06 Epoch 456/500 6/6 [==============================] - 0s 285us/sample - loss: 3.9344e-06 Epoch 457/500 6/6 [==============================] - 0s 298us/sample - loss: 3.8532e-06 Epoch 458/500 6/6 [==============================] - 0s 262us/sample - loss: 3.7744e-06 Epoch 459/500 6/6 [==============================] - 0s 307us/sample - loss: 3.6966e-06 Epoch 460/500 6/6 [==============================] - 0s 322us/sample - loss: 3.6206e-06 Epoch 461/500 6/6 [==============================] - 0s 277us/sample - loss: 3.5462e-06 Epoch 462/500 6/6 [==============================] - 0s 282us/sample - loss: 3.4736e-06 Epoch 463/500 6/6 [==============================] - 0s 295us/sample - loss: 
3.4022e-06 Epoch 464/500 6/6 [==============================] - 0s 268us/sample - loss: 3.3321e-06 Epoch 465/500 6/6 [==============================] - 0s 273us/sample - loss: 3.2640e-06 Epoch 466/500 6/6 [==============================] - 0s 461us/sample - loss: 3.1969e-06 Epoch 467/500 6/6 [==============================] - 0s 296us/sample - loss: 3.1314e-06 Epoch 468/500 6/6 [==============================] - 0s 353us/sample - loss: 3.0670e-06 Epoch 469/500 6/6 [==============================] - 0s 310us/sample - loss: 3.0040e-06 Epoch 470/500 6/6 [==============================] - 0s 350us/sample - loss: 2.9423e-06 Epoch 471/500 6/6 [==============================] - 0s 356us/sample - loss: 2.8817e-06 Epoch 472/500 6/6 [==============================] - 0s 295us/sample - loss: 2.8224e-06 Epoch 473/500 6/6 [==============================] - 0s 299us/sample - loss: 2.7645e-06 Epoch 474/500 6/6 [==============================] - 0s 308us/sample - loss: 2.7075e-06 Epoch 475/500 6/6 [==============================] - 0s 274us/sample - loss: 2.6518e-06 Epoch 476/500 6/6 [==============================] - 0s 268us/sample - loss: 2.5971e-06 Epoch 477/500 6/6 [==============================] - 0s 393us/sample - loss: 2.5439e-06 Epoch 478/500 6/6 [==============================] - 0s 361us/sample - loss: 2.4913e-06 Epoch 479/500 6/6 [==============================] - 0s 291us/sample - loss: 2.4405e-06 Epoch 480/500 6/6 [==============================] - 0s 330us/sample - loss: 2.3906e-06 Epoch 481/500 6/6 [==============================] - 0s 280us/sample - loss: 2.3414e-06 Epoch 482/500 6/6 [==============================] - 0s 268us/sample - loss: 2.2932e-06 Epoch 483/500 6/6 [==============================] - 0s 268us/sample - loss: 2.2458e-06 Epoch 484/500 6/6 [==============================] - 0s 279us/sample - loss: 2.1997e-06 Epoch 485/500 6/6 [==============================] - 0s 243us/sample - loss: 2.1546e-06 Epoch 486/500 6/6 [==============================] - 
0s 292us/sample - loss: 2.1106e-06 Epoch 487/500 6/6 [==============================] - 0s 319us/sample - loss: 2.0672e-06 Epoch 488/500 6/6 [==============================] - 0s 273us/sample - loss: 2.0247e-06 Epoch 489/500 6/6 [==============================] - 0s 267us/sample - loss: 1.9831e-06 Epoch 490/500 6/6 [==============================] - 0s 283us/sample - loss: 1.9421e-06 Epoch 491/500 6/6 [==============================] - 0s 270us/sample - loss: 1.9024e-06 Epoch 492/500 6/6 [==============================] - 0s 261us/sample - loss: 1.8632e-06 Epoch 493/500 6/6 [==============================] - 0s 318us/sample - loss: 1.8249e-06 Epoch 494/500 6/6 [==============================] - 0s 276us/sample - loss: 1.7874e-06 Epoch 495/500 6/6 [==============================] - 0s 257us/sample - loss: 1.7507e-06 Epoch 496/500 6/6 [==============================] - 0s 263us/sample - loss: 1.7147e-06 Epoch 497/500 6/6 [==============================] - 0s 304us/sample - loss: 1.6797e-06 Epoch 498/500 6/6 [==============================] - 0s 302us/sample - loss: 1.6452e-06 Epoch 499/500 6/6 [==============================] - 0s 304us/sample - loss: 1.6113e-06 Epoch 500/500 6/6 [==============================] - 0s 369us/sample - loss: 1.5780e-06

<tensorflow.python.keras.callbacks.History at 0x7fb86422d7f0>

print(model.predict([10]))

[[30.996334]]

model.evaluate([10, 11], [31, 34])

2/1 [============================================================] - 0s 19ms/sample - loss: 1.5523e-05

1.5523408364970237e-05

Section 2: Introduction to Computer Vision¶

In the previous section, we saw how to use a neural network to learn the relationship between two variables, x and y.

However, in that instance using ML is a bit of overkill: it would have been easier to write the function $y = 3x + 1$ directly than to learn the relationship between $x$ and $y$ from a fixed set of values.
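For comparison, here is a minimal sketch in plain NumPy (not part of the original lab) showing that the hand-written rule reproduces the table exactly, and that an ordinary least-squares fit with `np.polyfit` recovers the slope and intercept that the network only approximates (its prediction for x = 10 was about 30.996 rather than 31).

```python
import numpy as np

# The table from Section 1
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0])
ys = np.array([-2.0, 1.0, 4.0, 7.0, 10.0, 13.0])

def rule(x):
    """The hand-written rule y = 3x + 1."""
    return 3 * x + 1

# The explicit rule matches every row of the table
assert np.array_equal(rule(xs), ys)

# A degree-1 least-squares fit returns [slope, intercept]
slope, intercept = np.polyfit(xs, ys, deg=1)
print(f"{slope:.3f} {intercept:.3f}")  # 3.000 1.000
print(rule(10))  # 31
```

Because the data lie exactly on a line, the closed-form fit is exact up to floating-point error, whereas gradient descent only gets close after many epochs.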

But what if we are dealing with a case where writing rules like $y = 3x + 1$ is much more difficult? Computer Vision problems are a good example. Let's look at a scenario where we want to recognize different items of clothing, using a model trained on a dataset that contains 10 different types.

A new dataset: Fashion MNIST¶

We will train a neural network to recognize items of clothing from a common dataset called Fashion MNIST. You can learn more about this dataset here.

It contains 70,000 items of clothing in 10 different categories. Each item of clothing is in a 28x28 greyscale image. You can see some examples here:

![]()
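Labels in Fashion MNIST are integers from 0 to 9. The mapping below follows the class list published with the dataset; `CLASS_NAMES` and `label_to_name` are our own helpers (not part of `tf.keras.datasets`), handy for turning a label such as 9 into a readable name.

```python
# Class names for Fashion MNIST labels 0-9, in label order.
# CLASS_NAMES is a helper defined here; tf.keras.datasets does not provide it.
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

def label_to_name(label):
    """Return the human-readable class name for an integer label."""
    return CLASS_NAMES[int(label)]

print(label_to_name(9))  # Ankle boot
```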

# The Fashion MNIST data is available directly in the tf.keras.datasets API.

mnist_dataset = tf.keras.datasets.fashion_mnist

# load_data() returns two tuples of NumPy arrays: (training images, training labels) and (test images, test labels)

(X_train, y_train), (X_test, y_test) = mnist_dataset.load_data()

print("Shape of X_train = ", X_train.shape)

print("Shape of y_train = ", y_train.shape)

print("Shape of X_test = ", X_test.shape)

print("Shape of y_test = ", y_test.shape)

Shape of X_train = (60000, 28, 28) Shape of y_train = (60000,) Shape of X_test = (10000, 28, 28) Shape of y_test = (10000,)

print(y_train[0])

print(X_train[0])

9

[[ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 13 73 0 0 1 4 0 0 0 0 1 1 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 3 0 36 136 127 62 54 0 0 0 1 3 4 0 0 3]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 6 0 102 204 176 134 144 123 23 0 0 0 0 12 10 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 155 236 207 178 107 156 161 109 64 23 77 130 72 15]
 [ 0 0 0 0 0 0 0 0 0 0 0 1 0 69 207 223 218 216 216 163 127 121 122 146 141 88 172 66]
 [ 0 0 0 0 0 0 0 0 0 1 1 1 0 200 232 232 233 229 223 223 215 213 164 127 123 196 229 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 183 225 216 223 228 235 227 224 222 224 221 223 245 173 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 193 228 218 213 198 180 212 210 211 213 223 220 243 202 0]
 [ 0 0 0 0 0 0 0 0 0 1 3 0 12 219 220 212 218 192 169 227 208 218 224 212 226 197 209 52]
 [ 0 0 0 0 0 0 0 0 0 0 6 0 99 244 222 220 218 203 198 221 215 213 222 220 245 119 167 56]
 [ 0 0 0 0 0 0 0 0 0 4 0 0 55 236 228 230 228 240 232 213 218 223 234 217 217 209 92 0]
 [ 0 0 1 4 6 7 2 0 0 0 0 0 237 226 217 223 222 219 222 221 216 223 229 215 218 255 77 0]
 [ 0 3 0 0 0 0 0 0 0 62 145 204 228 207 213 221 218 208 211 218 224 223 219 215 224 244 159 0]
 [ 0 0 0 0 18 44 82 107 189 228 220 222 217 226 200 205 211 230 224 234 176 188 250 248 233 238 215 0]
 [ 0 57 187 208 224 221 224 208 204 214 208 209 200 159 245 193 206 223 255 255 221 234 221 211 220 232 246 0]
 [ 3 202 228 224 221 211 211 214 205 205 205 220 240 80 150 255 229 221 188 154 191 210 204 209 222 228 225 0]
 [ 98 233 198 210 222 229 229 234 249 220 194 215 217 241 65 73 106 117 168 219 221 215 217 223 223 224 229 29]
 [ 75 204 212 204 193 205 211 225 216 185 197 206 198 213 240 195 227 245 239 223 218 212 209 222 220 221 230 67]
 [ 48 203 183 194 213 197 185 190 194 192 202 214 219 221 220 236 225 216 199 206 186 181 177 172 181 205 206 115]
 [ 0 122 219 193 179 171 183 196 204 210 213 207 211 210 200 196 194 191 195 191 198 192 176 156 167 177 210 92]
 [ 0 0 74 189 212 191 175 172 175 181 185 188 189 188 193 198 204 209 210 210 211 188 188 194 192 216 170 0]
 [ 2 0 0 0 66 200 222 237 239 242 246 243 244 221 220 193 191 179 182 182 181 176 166 168 99 58 0 0]
 [ 0 0 0 0 0 0 0 40 61 44 72 41 35 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]
 [ 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0]]

Visualizing the dataset¶

import matplotlib.pyplot as plt

plt.imshow(X_train[0], cmap='gray')

plt.show()

Note that the pixel values in the image are between 0 and 255. Training a neural network is easier if we scale every value to the range between 0 and 1, a process usually referred to as normalizing.
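A tiny sketch of what dividing by 255 does, using a made-up 2x2 `uint8` array in place of a real image: the result becomes floating point and the range [0, 255] maps onto [0, 1].

```python
import numpy as np

# A tiny stand-in for a greyscale image: uint8 pixels in [0, 255]
pixels = np.array([[0, 128], [64, 255]], dtype=np.uint8)

# Dividing by 255.0 converts to float64 and maps [0, 255] onto [0, 1]
normalized = pixels / 255.0

print(normalized.dtype)                    # float64
print(normalized.min(), normalized.max())  # 0.0 1.0
```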

print("Before normalizing: ")

print("\t min of train is {}, max of train is {}".format(np.min(X_train), np.max(X_train)))

print("\t min of test is {}, max of test is {}".format(np.min(X_test), np.max(X_test)))

X_train = X_train/255

X_test = X_test/255

print("After normalizing: ")

print("\t min of train is {}, max of train is {}".format(np.min(X_train), np.max(X_train)))

print("\t min of test is {}, max of test is {}".format(np.min(X_test), np.max(X_test)))

Before normalizing: min of train is 0, max of train is 255 min of test is 0, max of test is 255 After normalizing: min of train is 0.0, max of train is 1.0 min of test is 0.0, max of test is 1.0

Define a Simple Model¶

# define the model

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(128, activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(10, activation=tf.nn.softmax))
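To see how big this model is, it can help to count its weights by hand. `Flatten` turns each 28x28 image into a vector of 784 values, and each `Dense` layer has one weight per input-output pair plus one bias per output. The helper `dense_params` below is ours, not a Keras function; since no `input_shape` is given here, `model.summary()` should only be able to report these numbers once the model has been built (for example after `model.fit`).

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

flat = 28 * 28                    # Flatten: 28x28 image -> 784 values
hidden = dense_params(flat, 128)  # Dense(128)
output = dense_params(128, 10)    # Dense(10)

print(flat, hidden, output, hidden + output)  # 784 100480 1290 101770
```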

Compile the Model¶

# compile the model

model.compile(optimizer = tf.optimizers.SGD(),

loss = 'sparse_categorical_crossentropy',

metrics=['accuracy'])
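The labels are plain integers (0 to 9) rather than one-hot vectors, which is why the `sparse_` variant of categorical cross-entropy is used. For one example, the loss is the negative log of the probability the model assigns to the true class, averaged over the batch. A minimal NumPy sketch of that formula follows; the function name and the sample probabilities are ours, and Keras' own implementation additionally guards against taking log(0).

```python
import numpy as np

def sparse_categorical_crossentropy(labels, probs):
    """Mean of -log(p[true class]) over a batch.

    labels: integer class ids, shape (batch,)
    probs:  softmax outputs, shape (batch, num_classes)
    """
    labels = np.asarray(labels)
    probs = np.asarray(probs, dtype=float)
    # Pick, for each row, the probability of that row's true class
    true_class_probs = probs[np.arange(len(labels)), labels]
    return float(np.mean(-np.log(true_class_probs)))

# Two hypothetical examples with three classes
probs = [[0.7, 0.2, 0.1],
         [0.1, 0.1, 0.8]]
labels = [0, 2]
batch_loss = sparse_categorical_crossentropy(labels, probs)
print(batch_loss)
```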

Train the model¶

# train for 5 epochs

model.fit(X_train, y_train, epochs=5)

Train on 60000 samples Epoch 1/5 60000/60000 [==============================] - 3s 43us/sample - loss: 0.7477 - accuracy: 0.7619 Epoch 2/5 60000/60000 [==============================] - 2s 39us/sample - loss: 0.5149 - accuracy: 0.8245 Epoch 3/5 60000/60000 [==============================] - 2s 39us/sample - loss: 0.4687 - accuracy: 0.8370 Epoch 4/5 60000/60000 [==============================] - 2s 39us/sample - loss: 0.4434 - accuracy: 0.8463 Epoch 5/5 60000/60000 [==============================] - 2s 39us/sample - loss: 0.4262 - accuracy: 0.8529

<tensorflow.python.keras.callbacks.History at 0x7fb8836002e8>

Prediction and evaluation¶

# prediction on unseen data

classifications = model.predict(X_test)

print(classifications[0])

print("True label is ", y_test[0])

print("The predicted label is ", np.argmax(classifications[0]))

[4.0976588e-06 4.8142583e-06 1.8533812e-05 7.6556162e-06 3.0518473e-05 1.0107300e-01 7.4795840e-05 2.0220113e-01 4.4535613e-03 6.9213188e-01] True label is 9 The predicted label is 9
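Each prediction is a vector of 10 probabilities produced by the softmax output layer; they sum to 1, and `np.argmax` picks the index of the largest one as the predicted label. The sketch below re-implements softmax in NumPy purely for illustration (in the lab, Keras computes it inside the `Dense(10, activation=tf.nn.softmax)` layer); the scores are made-up numbers.

```python
import numpy as np

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    shifted = np.asarray(scores, dtype=float) - np.max(scores)
    exp = np.exp(shifted)
    return exp / exp.sum()

scores = np.array([1.0, 2.0, 0.5, 4.0])  # hypothetical raw scores (logits)
probs = softmax(scores)

print(probs.round(3), probs.sum())
print("predicted label:", int(np.argmax(probs)))  # predicted label: 3
```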

# evaluation on test set

loss, acc = model.evaluate(X_test, y_test, verbose=0)

print("loss on test set is : ", loss)

print("accuracy on test set is : ", acc)

loss on test set is : 0.44848056111335755 accuracy on test set is : 0.8416

More Neurons!¶

# the data

(X_train, y_train), (X_test, y_test) = tf.keras.datasets.fashion_mnist.load_data()

X_train = X_train/255.0

X_test = X_test/255.0

# define the model

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(1024, activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(10, activation=tf.nn.softmax))

# compile the model

model.compile(optimizer = tf.optimizers.SGD(),

loss = 'sparse_categorical_crossentropy',

metrics=['accuracy'])

# train the model

model.fit(X_train, y_train, epochs=5)

Train on 60000 samples Epoch 1/5 60000/60000 [==============================] - 3s 44us/sample - loss: 0.7002 - accuracy: 0.7727 Epoch 2/5 60000/60000 [==============================] - 2s 39us/sample - loss: 0.4927 - accuracy: 0.8332 Epoch 3/5 60000/60000 [==============================] - 3s 44us/sample - loss: 0.4516 - accuracy: 0.8464 Epoch 4/5 60000/60000 [==============================] - 2s 40us/sample - loss: 0.4269 - accuracy: 0.8536 Epoch 5/5 60000/60000 [==============================] - 2s 40us/sample - loss: 0.4094 - accuracy: 0.8588

<tensorflow.python.keras.callbacks.History at 0x7fb83c4ddac8>

# predict on the whole test set, then inspect the first example

predictions = model.predict(X_test)

print(predictions[0])

print("The predicted label is ", np.argmax(predictions[0]))

[3.9984065e-05 1.0286058e-05 6.5077933e-05 3.2205684e-05 4.0681407e-05 1.2416209e-01 8.3608582e-05 2.0007513e-01 7.2584585e-03 6.6823244e-01] The predicted label is 9

print("True label is ", y_test[0])

True label is 9

# evaluation on test set

loss, acc = model.evaluate(X_test, y_test, verbose=0)

print("loss on test set is : ", loss)

print("accuracy on test set is : ", acc)

loss on test set is : 0.43644546914100646 accuracy on test set is : 0.8483

Let's go DEEP! More Layers!¶

# get the data

(X_train, y_train), (X_test, y_test) = tf.keras.datasets.fashion_mnist.load_data()

X_train = X_train/255.0

X_test = X_test/255.0

# define the model

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(1024, activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(128, activation=tf.nn.relu))

model.add(tf.keras.layers.Dense(10, activation=tf.nn.softmax))

# compile the model

model.compile(optimizer = tf.optimizers.SGD(),

loss = 'sparse_categorical_crossentropy',

metrics=['accuracy'])

# train the model

model.fit(X_train, y_train, epochs=5)

Train on 60000 samples Epoch 1/5 60000/60000 [==============================] - 3s 45us/sample - loss: 0.6584 - accuracy: 0.7837 Epoch 2/5 60000/60000 [==============================] - 3s 42us/sample - loss: 0.4640 - accuracy: 0.8404 Epoch 3/5 60000/60000 [==============================] - 2s 41us/sample - loss: 0.4219 - accuracy: 0.8521 Epoch 4/5 60000/60000 [==============================] - 3s 42us/sample - loss: 0.3964 - accuracy: 0.8610 Epoch 5/5 60000/60000 [==============================] - 3s 42us/sample - loss: 0.3745 - accuracy: 0.8699

<tensorflow.python.keras.callbacks.History at 0x7fb8044eabe0>

# predict on the whole test set, then inspect the first example

classifications = model.predict(X_test)

print(classifications[0])

print("True label is ", y_test[0])

print("The predicted label is ", np.argmax(classifications[0]))

[1.0156216e-04 5.8672558e-06 6.8080219e-05 4.3952998e-05 5.2518335e-05 7.3960766e-02 2.0629779e-04 1.3262554e-01 1.0721110e-02 7.8221434e-01] True label is 9 The predicted label is 9

# evaluation on test set

loss, acc = model.evaluate(X_test, y_test, verbose=0)

print("loss on test set is : ", loss)

print("accuracy on test set is : ", acc)

loss on test set is : 0.4090584322690964 accuracy on test set is : 0.8535

Section 3: Convolutions¶

Limitations of the previous DNN¶

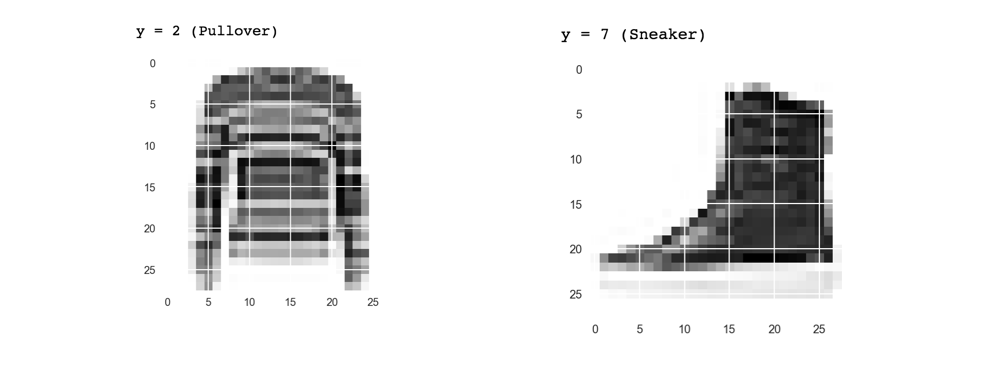

In the previous section, we saw how to train an image classifier for fashion items using the Fashion MNIST dataset.

This gave us a fairly accurate classifier, but there was an obvious constraint: the images were 28x28 greyscale, and the item was always centered in the image.

For example, here are a couple of the images in Fashion MNIST:

The DNN you created simply learned from the raw pixels what makes up a sweater and what makes up a boot in this context. But how might it classify this image?

While it's clear that there are boots in this image, the classifier would fail for several reasons. First, of course, the image is not 28x28 greyscale; more importantly, the classifier was trained on the raw pixels of a left-facing boot, not on the features that make up what a boot is.

That's where convolutions are very powerful. A convolution is a filter that passes over an image, processes it, and extracts features that show a commonality in the image. In this lab you'll see how they work by processing an image to see whether you can extract features from it!

Generating convolutions is very simple: we scan every pixel in the image and look at its neighboring pixels. We multiply the values of these pixels by the corresponding weights in a filter and add up the products; the sum becomes the new value of that pixel.
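As a concrete example, here is the computation for a single output pixel: take the 3x3 neighbourhood around it, multiply it element-wise by the filter, and sum. The patch values are made up for illustration; the filter is the `[[-1, -2, -1], [0, 0, 0], [1, 2, 1]]` filter used in the code below. Strictly speaking, this sliding multiply-and-sum without flipping the filter is cross-correlation, which is also what the "convolution" layers in CNNs compute.

```python
import numpy as np

# A hypothetical 3x3 neighbourhood centred on the pixel being processed
patch = np.array([[10, 10, 10],
                  [50, 50, 50],
                  [90, 90, 90]])

# The filter applied later in this section
kernel = np.array([[-1, -2, -1],
                   [ 0,  0,  0],
                   [ 1,  2,  1]])

# Multiply each pixel by the matching filter weight, then add everything up
value = int(np.sum(patch * kernel))
print(value)  # 320
```

The lab's loop below then clips such results to the valid pixel range 0 to 255, so this pixel would end up as 255.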

So, for example, consider this:

Let's explore a bit¶

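import numpy as np
import matplotlib.pyplot as plt

# scipy.misc.ascent is deprecated in newer SciPy releases in favour of
# scipy.datasets.ascent (which needs the optional `pooch` package installed).
try:
    from scipy.datasets import ascent  # SciPy >= 1.10
except ImportError:
    from scipy.misc import ascent      # older SciPy

# Load the 512x512 greyscale "ascent" test image, as floats
i = ascent().astype(np.float64)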

plt.grid(False)

plt.gray()

plt.axis('off')

plt.imshow(i)

plt.show()

Now we can create a filter as a 3x3 array.

# This filter detects edges nicely.
# It creates a convolution that only passes through sharp edges and straight lines.
# Experiment with different values for fun effects.
#filter = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]

# A couple more filters to try for fun!
filter = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
#filter = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

# If the weights in the filter don't add up to 0 or 1, you should probably
# apply a weight to make them do so. For example, the weights 1,1,1 1,2,1 1,1,1
# add up to 10, so you would set a weight of .1 to normalize them.
weight = 1

i_transformed = np.copy(i)
size_x = i_transformed.shape[0]
size_y = i_transformed.shape[1]

# Visit every interior pixel (the 1-pixel border is left unchanged).
# filter[r][c] is applied to the neighbour at row offset r-1 and column offset c-1,
# so the filter is laid out the same way as the image it is applied to.
for x in range(1, size_x - 1):
    for y in range(1, size_y - 1):
        convolution = 0.0
        convolution = convolution + (i[x - 1, y - 1] * filter[0][0])
        convolution = convolution + (i[x - 1, y] * filter[0][1])
        convolution = convolution + (i[x - 1, y + 1] * filter[0][2])
        convolution = convolution + (i[x, y - 1] * filter[1][0])
        convolution = convolution + (i[x, y] * filter[1][1])
        convolution = convolution + (i[x, y + 1] * filter[1][2])
        convolution = convolution + (i[x + 1, y - 1] * filter[2][0])
        convolution = convolution + (i[x + 1, y] * filter[2][1])
        convolution = convolution + (i[x + 1, y + 1] * filter[2][2])
        convolution = convolution * weight
        # Clip the result to the valid pixel range [0, 255]
        if convolution < 0:
            convolution = 0
        if convolution > 255:
            convolution = 255
        i_transformed[x, y] = convolution

# Plot the image. Note the size of the axes -- they are 512 by 512

plt.gray()

plt.grid(False)

plt.imshow(i_transformed)

#plt.axis('off')

plt.show()
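To see why the two Sobel-style filters discussed next respond to different directions, here is a small self-contained sketch on a synthetic 6x6 image containing a single vertical edge (dark left half, bright right half). The helper `correlate3x3` is our own; it applies `kernel[r][c]` to the pixel at row offset `r-1` and column offset `c-1`, and it does not clip the result.

```python
import numpy as np

def correlate3x3(image, kernel):
    """Slide a 3x3 kernel over the image interior (no padding), multiply and sum.
    kernel[r][c] is applied to image[x + r - 1, y + c - 1]."""
    image = np.asarray(image, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    h, w = image.shape
    out = np.zeros((h - 2, w - 2))
    for x in range(1, h - 1):
        for y in range(1, w - 1):
            out[x - 1, y - 1] = np.sum(image[x - 1:x + 2, y - 1:y + 2] * kernel)
    return out

# 6x6 synthetic image: left half 0, right half 255 -> one vertical edge
img = np.zeros((6, 6))
img[:, 3:] = 255

vertical_filter = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
horizontal_filter = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

print(np.abs(correlate3x3(img, vertical_filter)).max())    # 1020.0
print(np.abs(correlate3x3(img, horizontal_filter)).max())  # 0.0
```

The first filter compares the columns left and right of each pixel, so it fires on the vertical edge; the second compares the rows above and below, which are identical here, so it stays at zero.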

So, consider the following filter values, and their impact on the image.

Using -1,0,1,-2,0,2,-1,0,1 gives us a very strong set of vertical lines:

Using -1, -2, -1, 0, 0, 0, 1, 2, 1 gives us horizontal lines:

Pooling¶

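This code performs (2, 2) max pooling: the image is split into non-overlapping 2x2 blocks and each block is replaced by its largest value. Run it and you'll see that while the output has 1/4 as many pixels as the original (half the width and half the height), the extracted features are maintained!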

new_x = int(size_x / 2)
new_y = int(size_y / 2)
newImage = np.zeros((new_x, new_y))

# Step over the image in 2x2 blocks and keep the largest value of each block
for x in range(0, size_x, 2):
    for y in range(0, size_y, 2):
        pixels = []
        pixels.append(i_transformed[x, y])
        pixels.append(i_transformed[x + 1, y])
        pixels.append(i_transformed[x, y + 1])
        pixels.append(i_transformed[x + 1, y + 1])
        # After sorting in descending order, pixels[0] is the maximum
        pixels.sort(reverse=True)
        newImage[int(x / 2), int(y / 2)] = pixels[0]

# Plot the image. Note the size of the axes -- now 256 pixels instead of 512

plt.gray()

plt.grid(False)

plt.imshow(newImage)

#plt.axis('off')

plt.show()
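The same 2x2 max pooling can be written without explicit loops by reshaping the image into 2x2 blocks and taking the maximum of each block. This NumPy sketch assumes both image dimensions are even (true for the 512x512 image here); it is only an illustration, not the Keras `MaxPooling2D` implementation.

```python
import numpy as np

def max_pool_2x2(image):
    """2x2 max pooling with stride 2; assumes even height and width."""
    h, w = image.shape
    # blocks[i, a, j, b] == image[2*i + a, 2*j + b]
    blocks = image.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

img = np.array([[1, 5, 2, 0],
                [3, 4, 8, 1],
                [0, 0, 7, 6],
                [9, 2, 3, 3]])

print(max_pool_2x2(img))
# [[5 8]
#  [9 7]]
```

Applied to `i_transformed`, `max_pool_2x2(i_transformed)` should give the same 256x256 result as the loop above.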

Section 4: Improving accuracy using Convolutions¶

import tensorflow as tf

mnist = tf.keras.datasets.fashion_mnist

(X_train, y_train), (X_test, y_test) = mnist.load_data()

X_train = X_train / 255.0

X_test = X_test / 255.0

model = tf.keras.models.Sequential([

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(128, activation=tf.nn.relu),

tf.keras.layers.Dense(10, activation=tf.nn.softmax)

])

model.compile(optimizer=tf.optimizers.Adam(), loss='sparse_categorical_crossentropy', metrics=['accuracy'])

model.fit(X_train, y_train, epochs=5)

loss, acc = model.evaluate(X_test, y_test, verbose=0)

print("Loss on test set is ", loss)

print("Accuracy on test set is", acc)

Train on 60000 samples Epoch 1/5 60000/60000 [==============================] - 3s 44us/sample - loss: 0.4976 - accuracy: 0.8247 Epoch 2/5 60000/60000 [==============================] - 2s 41us/sample - loss: 0.3775 - accuracy: 0.8632 Epoch 3/5 60000/60000 [==============================] - 2s 41us/sample - loss: 0.3360 - accuracy: 0.8785 Epoch 4/5 60000/60000 [==============================] - 3s 43us/sample - loss: 0.3133 - accuracy: 0.8858 Epoch 5/5 60000/60000 [==============================] - 3s 43us/sample - loss: 0.2966 - accuracy: 0.8908 Loss on test set is 0.342407232940197 Accuracy on test set is 0.8753

# prepare the dataset

(X_train, y_train), (X_test, y_test) = tf.keras.datasets.fashion_mnist.load_data()

X_train = X_train.reshape(60000, 28, 28, 1)

X_train = X_train / 255.0

X_test = X_test.reshape(10000, 28, 28, 1)

X_test = X_test / 255.0

# define the model

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Conv2D(64, (3,3), activation='relu', input_shape=(28, 28, 1)))

model.add(tf.keras.layers.MaxPooling2D(2, 2))

model.add(tf.keras.layers.Conv2D(64, (3,3), activation='relu'))

model.add(tf.keras.layers.MaxPooling2D(2,2))

model.add(tf.keras.layers.Flatten())

model.add(tf.keras.layers.Dense(128, activation='relu'))

model.add(tf.keras.layers.Dense(10, activation='softmax'))

# compile the model

model.compile(optimizer=tf.optimizers.Adam(), loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# model summary

model.summary()

Model: "sequential_10" _________________________________________________________________ Layer (type) Output Shape Param # ================================================================= conv2d_2 (Conv2D) (None, 26, 26, 64) 640 _________________________________________________________________ max_pooling2d_2 (MaxPooling2 (None, 13, 13, 64) 0 _________________________________________________________________ conv2d_3 (Conv2D) (None, 11, 11, 64) 36928 _________________________________________________________________ max_pooling2d_3 (MaxPooling2 (None, 5, 5, 64) 0 _________________________________________________________________ flatten_9 (Flatten) (None, 1600) 0 _________________________________________________________________ dense_21 (Dense) (None, 128) 204928 _________________________________________________________________ dense_22 (Dense) (None, 10) 1290 ================================================================= Total params: 243,786 Trainable params: 243,786 Non-trainable params: 0 _________________________________________________________________
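The shapes and parameter counts in this summary can be checked by hand: a 3x3 "valid" convolution with stride 1 shrinks each side by 2, 2x2 max pooling halves it (rounding down), and a `Conv2D` layer has `(3*3*in_channels + 1) * filters` parameters (one bias per filter). A small arithmetic sketch; the helper names are ours, not Keras functions:

```python
def conv_out(size, kernel=3):
    """Output side length of a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool=2):
    """Output side length of non-overlapping max pooling (floor division)."""
    return size // pool

def conv_params(kernel, in_channels, filters):
    """kernel*kernel*in_channels weights per filter, plus one bias per filter."""
    return (kernel * kernel * in_channels + 1) * filters

side = pool_out(conv_out(28))    # 28 -> 26 -> 13
side = pool_out(conv_out(side))  # 13 -> 11 -> 5
flat = side * side * 64          # 5 * 5 * 64 = 1600

params = [
    conv_params(3, 1, 64),   # first Conv2D: 640
    conv_params(3, 64, 64),  # second Conv2D: 36928
    flat * 128 + 128,        # Dense(128): 204928
    128 * 10 + 10,           # Dense(10): 1290
]
print(side, flat, params, sum(params))  # 5 1600 [640, 36928, 204928, 1290] 243786
```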

# train the model

model.fit(X_train, y_train, epochs=5)

Train on 60000 samples Epoch 1/5 60000/60000 [==============================] - 5s 91us/sample - loss: 0.4334 - accuracy: 0.8451 Epoch 2/5 60000/60000 [==============================] - 4s 70us/sample - loss: 0.2891 - accuracy: 0.8938 Epoch 3/5 60000/60000 [==============================] - 4s 71us/sample - loss: 0.2455 - accuracy: 0.9094 Epoch 4/5 60000/60000 [==============================] - 4s 71us/sample - loss: 0.2115 - accuracy: 0.9210 Epoch 5/5 60000/60000 [==============================] - 4s 71us/sample - loss: 0.1854 - accuracy: 0.9306

<tensorflow.python.keras.callbacks.History at 0x7fb80c146668>

loss, acc = model.evaluate(X_test, y_test, verbose=0)

print("Loss on test set is ", loss)

print("Accuracy on test set is", acc)

Loss on test set is 0.2482373044013977 Accuracy on test set is 0.9117

Section 5: Using Convolutions with Complex Images¶

In Fashion MNIST classification, the subject is always in the center of a 28x28 image.

In this section, we will take it to the next level, training a network to recognize features in images where the subject can be anywhere in the frame!

We will build a horses-or-humans classifier that tells you whether a given image contains a horse or a human; the network is trained to recognize the features that distinguish the two.

Get the dataset¶

!mkdir -p ./data
!wget --no-check-certificate \
    https://storage.googleapis.com/laurencemoroney-blog.appspot.com/horse-or-human.zip \
    -O ./data/horse-or-human.zip

--2019-11-20 22:50:34-- https://storage.googleapis.com/laurencemoroney-blog.appspot.com/horse-or-human.zip Resolving storage.googleapis.com (storage.googleapis.com)... 216.58.207.240, 2a00:1450:400f:80c::2010 Connecting to storage.googleapis.com (storage.googleapis.com)|216.58.207.240|:443... connected. HTTP request sent, awaiting response... 200 OK Length: 149574867 (143M) [application/zip] Saving to: ‘./data/horse-or-human.zip’ ./data/horse-or-hum 100%[===================>] 142.65M 11.6MB/s in 13s 2019-11-20 22:50:49 (10.8 MB/s) - ‘./data/horse-or-human.zip’ saved [149574867/149574867]

import os

import zipfile

local_zip = './data/horse-or-human.zip'

zip_ref = zipfile.ZipFile(local_zip, 'r')

zip_ref.extractall('./data/horse-or-human')

zip_ref.close()

# Directory with our training horse pictures
train_horse_dir = os.path.join('./data/horse-or-human', 'horses')

# Directory with our training human pictures
train_human_dir = os.path.join('./data/horse-or-human', 'humans')

# Take a peek at the data: the first 10 file names in each class
train_horse_names = os.listdir(train_horse_dir)
print(train_horse_names[:10])

train_human_names = os.listdir(train_human_dir)
print(train_human_names[:10])

['horse21-2.png', 'horse42-5.png', 'horse32-4.png', 'horse34-3.png', 'horse09-6.png', 'horse37-4.png', 'horse17-6.png', 'horse47-1.png', 'horse11-3.png', 'horse36-8.png'] ['human14-14.png', 'human04-19.png', 'human09-13.png', 'human14-27.png', 'human16-28.png', 'human17-24.png', 'human17-25.png', 'human01-09.png', 'human13-30.png', 'human06-28.png']

print('total training horse images:', len(os.listdir(train_horse_dir)))

print('total training human images:', len(os.listdir(train_human_dir)))

total training horse images: 500
total training human images: 527
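With 500 horse and 527 human images the two classes are roughly balanced. A quick sanity check, assuming the counts above, is the accuracy of a trivial model that always predicts the larger class; any useful classifier should clearly beat this baseline.

```python
counts = {"horses": 500, "humans": 527}  # counts printed above

total = sum(counts.values())                        # 1027 images in total
majority_class = max(counts, key=counts.get)        # the larger class
baseline_accuracy = counts[majority_class] / total  # accuracy of always predicting it

print(total, majority_class, round(baseline_accuracy, 3))  # 1027 humans 0.513
```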

Visualization

%matplotlib inline
import os
import matplotlib.pyplot as plt
import matplotlib.image as mpimg

# Parameters for our graph; we'll output images in a 4x4 configuration
nrows = 4
ncols = 4

# Index for iterating over images
pic_index = 0

# Set up the matplotlib figure and size it to fit 4x4 pictures.
# Keep this in the same cell as the plotting loop: the inline backend closes
# figures at the end of each cell, so a size set in an earlier cell is lost.
fig = plt.gcf()
fig.set_size_inches(ncols * 4, nrows * 4)

# Pick the next 8 horse images and the next 8 human images
pic_index += 8
next_horse_pix = [os.path.join(train_horse_dir, fname)
                  for fname in train_horse_names[pic_index-8:pic_index]]
next_human_pix = [os.path.join(train_human_dir, fname)
                  for fname in train_human_names[pic_index-8:pic_index]]

for i, img_path in enumerate(next_horse_pix + next_human_pix):
    # Set up subplot; subplot indices start at 1
    sp = plt.subplot(nrows, ncols, i + 1)
    sp.axis('off')  # Don't show axes (or gridlines)
    img = mpimg.imread(img_path)
    plt.imshow(img)

plt.show()

Data Preprocessing

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# All images will be rescaled by 1./255
train_datagen = ImageDataGenerator(rescale=1/255)

# Flow training images in batches of 128 using the train_datagen generator
train_generator = train_datagen.flow_from_directory(
    './data/horse-or-human/',  # source directory for training images
    target_size=(300, 300),    # all images will be resized to 300x300
    batch_size=128,
    # Since we use binary_crossentropy loss, we need binary labels
    class_mode='binary')

Found 1027 images belonging to 2 classes.
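Keras infers the labels from the folder structure: when no `classes` list is given, each subdirectory becomes one class and indices are assigned in alphanumeric order of the folder names. The helper below is a hypothetical re-implementation of that rule for illustration only, run against a throwaway directory tree; on the real generator, `train_generator.class_indices` reports the actual mapping.

```python
import os
import tempfile


def infer_class_indices(directory):
    """Map each subdirectory name to an integer label, in sorted name order."""
    subdirs = sorted(
        name for name in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, name))
    )
    return {name: index for index, name in enumerate(subdirs)}


# Throwaway tree that mimics ./data/horse-or-human/
with tempfile.TemporaryDirectory() as root:
    for class_name in ("humans", "horses"):
        os.makedirs(os.path.join(root, class_name))
    indices = infer_class_indices(root)

print(indices)  # {'horses': 0, 'humans': 1}
```

This ordering is why a sigmoid output near 0 corresponds to "horse" and near 1 to "human".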

Building a Small Model from Scratch

import tensorflow as tf

model = tf.keras.models.Sequential([
    # The input shape is the desired image size, 300x300, with 3 color channels (RGB)
    # This is the first convolution
    tf.keras.layers.Conv2D(16, (3, 3), activation='relu', input_shape=(300, 300, 3)),
    tf.keras.layers.MaxPooling2D(2, 2),
    # The second convolution
    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # The third convolution
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # The fourth convolution
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # The fifth convolution
    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),
    tf.keras.layers.MaxPooling2D(2, 2),
    # Flatten the results to feed into a DNN
    tf.keras.layers.Flatten(),
    # 512-neuron hidden layer
    tf.keras.layers.Dense(512, activation='relu'),
    # Only 1 output neuron: a sigmoid value in [0, 1], where values near 0 mean
    # one class ('horses') and values near 1 mean the other ('humans')
    tf.keras.layers.Dense(1, activation='sigmoid')
])

model.summary()

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d (Conv2D)              (None, 298, 298, 16)      448
_________________________________________________________________
max_pooling2d (MaxPooling2D) (None, 149, 149, 16)      0
_________________________________________________________________
conv2d_1 (Conv2D)            (None, 147, 147, 32)      4640
_________________________________________________________________
max_pooling2d_1 (MaxPooling2 (None, 73, 73, 32)        0
_________________________________________________________________
conv2d_2 (Conv2D)            (None, 71, 71, 64)        18496
_________________________________________________________________
max_pooling2d_2 (MaxPooling2 (None, 35, 35, 64)        0
_________________________________________________________________
conv2d_3 (Conv2D)            (None, 33, 33, 64)        36928
_________________________________________________________________
max_pooling2d_3 (MaxPooling2 (None, 16, 16, 64)        0
_________________________________________________________________
conv2d_4 (Conv2D)            (None, 14, 14, 64)        36928
_________________________________________________________________
max_pooling2d_4 (MaxPooling2 (None, 7, 7, 64)          0
_________________________________________________________________
flatten (Flatten)            (None, 3136)              0
_________________________________________________________________
dense (Dense)                (None, 512)               1606144
_________________________________________________________________
dense_1 (Dense)              (None, 1)                 513
=================================================================
Total params: 1,704,097
Trainable params: 1,704,097
Non-trainable params: 0
_________________________________________________________________
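The output shapes and parameter counts in the summary can be reproduced by hand. Each 3x3 convolution with the default 'valid' padding shrinks each spatial dimension by 2, each `MaxPooling2D(2, 2)` halves it (rounding down), and a layer's parameter count is its weights plus one bias per unit. The sketch below is a hypothetical helper, `trace_model`, that assumes exactly this conv/pool stack followed by Flatten, Dense(512) and Dense(1).

```python
def trace_model(input_size=300, input_channels=3,
                filters=(16, 32, 64, 64, 64), kernel=3, pool=2, dense_units=512):
    """Follow Conv2D('valid') + MaxPooling2D blocks, then Flatten, Dense, Dense(1)."""
    size, channels = input_size, input_channels
    params = 0
    for f in filters:
        params += kernel * kernel * channels * f + f  # Conv2D: kernel weights + one bias per filter
        size = size - kernel + 1                      # 'valid' 3x3 conv: 300 -> 298, 149 -> 147, ...
        size = size // pool                           # 2x2 max pooling, rounding down: 298 -> 149, 147 -> 73
        channels = f
    flat = size * size * channels                     # Flatten: 7 * 7 * 64
    params += flat * dense_units + dense_units        # Dense(512)
    params += dense_units + 1                         # Dense(1): 512 weights + 1 bias
    return size, flat, params


print(trace_model())  # (7, 3136, 1704097)
```

Almost all of the 1,704,097 parameters (1,606,144) sit in the first Dense layer, which is why the conv/pool stack is used to shrink the 300x300 input down to 7x7 before flattening.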

from tensorflow.keras.optimizers import RMSprop

# Compile the model ('learning_rate' is the current name of the deprecated 'lr' argument)
model.compile(loss='binary_crossentropy',
              optimizer=RMSprop(learning_rate=0.001),
              metrics=['acc'])
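Binary cross-entropy compares the sigmoid output p (the predicted probability of class 1, 'humans') with the 0/1 label. The NumPy sketch below computes the mean loss over a small batch; the clipping constant `eps` is an assumption added to keep the logarithms finite, not necessarily the exact value Keras uses internally.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; y_true holds 0/1 labels, y_pred sigmoid outputs."""
    y_true = np.asarray(y_true, dtype=float)
    # Clip so that log(0) never occurs (eps is an assumed value)
    y_pred = np.clip(np.asarray(y_pred, dtype=float), eps, 1 - eps)
    losses = -(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred))
    return float(losses.mean())


# Labels: 0 = horse, 1 = human
confident = binary_crossentropy([1, 0], [0.9, 0.1])  # both predictions right
wrong = binary_crossentropy([1, 0], [0.1, 0.9])      # both predictions wrong
print(round(confident, 3), round(wrong, 3))  # 0.105 2.303
```

Confident mistakes are penalized far more heavily than confident correct answers are rewarded, which is what drives the sigmoid output toward 0 or 1.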

Train the model

history = model.fit_generator(
    train_generator,
    steps_per_epoch=8,
    epochs=15,
    verbose=1)
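`steps_per_epoch` counts batches, not images. With the batch size of 128 set on the generator, 8 steps draw 1,024 images per epoch, just short of the 1,027 found on disk. The sketch below shows the arithmetic; for comparison, a Keras `DirectoryIterator` reports `ceil(n / batch_size)` batches through `len(train_generator)`, which would be 9 here.

```python
import math

n_images = 1027        # "Found 1027 images belonging to 2 classes."
batch_size = 128       # batch_size passed to flow_from_directory
steps_per_epoch = 8    # steps_per_epoch passed to fit_generator

images_per_epoch = steps_per_epoch * batch_size              # images drawn per epoch
batches_per_pass = math.ceil(n_images / batch_size)          # batches to see every image once
last_batch = n_images - (batches_per_pass - 1) * batch_size  # size of the final, partial batch

print(images_per_epoch, batches_per_pass, last_batch)  # 1024 9 3
```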

Epoch 1/15 8/8 [==============================] - 5s 675ms/step - loss: 0.8957 - acc: 0.4917
Epoch 2/15 8/8 [==============================] - 5s 576ms/step - loss: 0.7593 - acc: 0.6085
Epoch 3/15 8/8 [==============================] - 5s 588ms/step - loss: 0.4911 - acc: 0.7809
Epoch 4/15 8/8 [==============================] - 5s 607ms/step - loss: 0.6424 - acc: 0.7964
Epoch 5/15 8/8 [==============================] - 5s 647ms/step - loss: 0.1733 - acc: 0.9266
Epoch 6/15 8/8 [==============================] - 5s 666ms/step - loss: 0.2128 - acc: 0.9248
Epoch 7/15 8/8 [==============================] - 5s 596ms/step - loss: 0.1706 - acc: 0.9344
Epoch 8/15 8/8 [==============================] - 5s 586ms/step - loss: 0.3964 - acc: 0.8743
Epoch 9/15 8/8 [==============================] - 5s 584ms/step - loss: 0.3021 - acc: 0.8877
Epoch 10/15 8/8 [==============================] - 5s 576ms/step - loss: 0.1190 - acc: 0.9577
Epoch 11/15 8/8 [==============================] - 5s 657ms/step - loss: 0.0413 - acc: 0.9863
Epoch 12/15 8/8 [==============================] - 4s 497ms/step - loss: 0.0378 - acc: 0.9858
Epoch 13/15 8/8 [==============================] - 5s 578ms/step - loss: 0.6119 - acc: 0.8910
Epoch 14/15 8/8 [==============================] - 5s 651ms/step - loss: 0.0474 - acc: 0.9854
Epoch 15/15 8/8 [==============================] - 5s 579ms/step - loss: 0.0119 - acc: 0.9989

Running the Model

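import os
import numpy as np
from tensorflow.keras.preprocessing import image

# In Google Colab, images can be uploaded interactively and are saved under /content/:
#   from google.colab import files
#   uploaded = files.upload()
# Outside Colab 'uploaded' is undefined, so read the images from a local folder instead.
# './data/my-images' is a hypothetical path: point it at your own test images.
image_dir = './data/my-images'

for fn in os.listdir(image_dir):
    # Load the image and resize it to the model's 300x300 input size
    img = image.load_img(os.path.join(image_dir, fn), target_size=(300, 300))
    x = image.img_to_array(img)
    # Apply the same 1/255 rescaling the training generator used
    x /= 255.0
    # Add a batch dimension: (300, 300, 3) -> (1, 300, 300, 3)
    x = np.expand_dims(x, axis=0)
    classes = model.predict(x)
    print(classes[0])
    # A sigmoid output above 0.5 means class 1 ('humans')
    if classes[0][0] > 0.5:
        print(fn + " is a human")
    else:
        print(fn + " is a horse")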

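Two details matter when classifying a new image: the model was trained on inputs rescaled by 1/255 with a leading batch dimension, and its single sigmoid output is thresholded at 0.5, with class 1 being 'humans'. The NumPy-only sketch below isolates that preprocessing and decision rule; `prepare_batch` and `label_from_sigmoid` are hypothetical helper names, and the all-white image is synthetic test data.

```python
import numpy as np


def prepare_batch(img_array):
    """Turn one (300, 300, 3) image with pixels in [0, 255] into a model-ready batch."""
    x = img_array.astype("float32") / 255.0  # same rescaling as ImageDataGenerator(rescale=1/255)
    return np.expand_dims(x, axis=0)         # add batch axis -> (1, 300, 300, 3)


def label_from_sigmoid(p, threshold=0.5):
    """Map a sigmoid output to a class name (horses -> 0, humans -> 1)."""
    return "human" if p > threshold else "horse"


fake = np.full((300, 300, 3), 255, dtype=np.uint8)  # synthetic all-white image
batch = prepare_batch(fake)
print(batch.shape, batch.max())                              # (1, 300, 300, 3) 1.0
print(label_from_sigmoid(0.83), label_from_sigmoid(0.12))    # human horse
```

Skipping the rescaling step would feed the network pixel values up to 255 instead of 1, a distribution it never saw during training.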

Visualizing Intermediate Representations

import os
import random
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf
from tensorflow.keras.preprocessing.image import img_to_array, load_img

# Define a new Model that takes an image as input and outputs the intermediate
# representation of every layer in the previous model. A Sequential model's
# .layers list does not contain an input layer, so every layer is included;
# this keeps the outputs aligned with layer_names below.
successive_outputs = [layer.output for layer in model.layers]
visualization_model = tf.keras.models.Model(inputs=model.input, outputs=successive_outputs)

# Prepare a random input image from the training set.
horse_img_files = [os.path.join(train_horse_dir, f) for f in train_horse_names]
human_img_files = [os.path.join(train_human_dir, f) for f in train_human_names]
img_path = random.choice(horse_img_files + human_img_files)

img = load_img(img_path, target_size=(300, 300))  # this is a PIL image
x = img_to_array(img)                             # NumPy array with shape (300, 300, 3)
x = x.reshape((1,) + x.shape)                     # NumPy array with shape (1, 300, 300, 3)
x /= 255                                          # rescale by 1/255, as during training

# Run the image through the network to obtain all intermediate representations.
successive_feature_maps = visualization_model.predict(x)

# Layer names, in the same order as successive_outputs, to use as plot titles
layer_names = [layer.name for layer in model.layers]

# Display the representations
for layer_name, feature_map in zip(layer_names, successive_feature_maps):
    # Only the conv / max-pooling layers, not the flatten or fully connected layers
    if len(feature_map.shape) == 4:
        n_features = feature_map.shape[-1]  # number of features in the feature map
        size = feature_map.shape[1]         # feature map has shape (1, size, size, n_features)
        # We will tile our images in this matrix
        display_grid = np.zeros((size, size * n_features))
        for i in range(n_features):
            # Postprocess the feature to make it visually palatable
            channel = feature_map[0, :, :, i]
            channel -= channel.mean()
            std = channel.std()
            if std > 0:  # all-zero (dead ReLU) channels would otherwise divide by zero
                channel /= std
            channel *= 64
            channel += 128
            channel = np.clip(channel, 0, 255).astype('uint8')
            # Tile each filter into this big horizontal grid
            display_grid[:, i * size : (i + 1) * size] = channel
        # Display the grid
        scale = 20. / n_features
        plt.figure(figsize=(scale * n_features, scale))
        plt.title(layer_name)
        plt.grid(False)
        plt.imshow(display_grid, aspect='auto', cmap='viridis')

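The post-processing applied to each channel (standardize, scale by 64, shift by 128, clip to [0, 255]) divides by the channel's standard deviation, which is 0 when a ReLU channel is entirely zero; without a guard, that produces NaNs and a NumPy "invalid value encountered" runtime warning. The sketch below is a NumPy-only version of the normalization and horizontal tiling steps with such a guard; the helper names are hypothetical and the random feature map is synthetic.

```python
import numpy as np


def to_displayable(channel):
    """Standardize one channel, then map it around 128 so roughly +/-2 std fits in [0, 255]."""
    channel = channel.astype("float64") - channel.mean()
    std = channel.std()
    if std > 0:  # a constant channel (e.g. all-zero after ReLU) has std 0
        channel /= std
    channel = channel * 64 + 128
    return np.clip(channel, 0, 255).astype("uint8")


def tile_channels(feature_map):
    """Lay out a (1, size, size, n_features) map as a (size, size * n_features) grid."""
    _, size, _, n_features = feature_map.shape
    grid = np.zeros((size, size * n_features), dtype="uint8")
    for i in range(n_features):
        grid[:, i * size:(i + 1) * size] = to_displayable(feature_map[0, :, :, i])
    return grid


rng = np.random.default_rng(0)
fmap = rng.random((1, 7, 7, 4))  # synthetic 7x7 map with 4 channels
fmap[..., 2] = 0.0               # simulate a dead channel
grid = tile_channels(fmap)
print(grid.shape)                # (7, 28)
```

The dead channel comes out as a flat mid-grey tile (value 128) instead of NaNs.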